Non-linear models

KNN/K-Nearest Neighbors

Key: Find k nearest neighbors of the current node, and use them to decide its class. So:

- The definition of “near”?

- How to decide which

kshould we use? - After we have k neighbors, what rule should we follow to decide the current node’s class?

Distance Metric

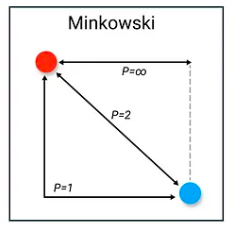

The Minkowski distance or distance is a metric in a normed vector space which can be considered as a generalization of other common distance metrics.

Assume variable and have dimension n, then:

|

|---|

| Minkowski distance (src) |

- When , , which is Manhattan Distance (in 2-d, it’s the sum of the legs).

- When , , which is Euclidean Distance (in 2-d, it’s the hypotenuse).

- When , , which is Chebyshev Distance (the greatest of difference between two vectors along any coordinate dimension).

How to get Chebyshev distance from Minkowski distance?

TODO

Parameter K Selection

-

Small K

- If neighbors are noises, the predictions tend to be wrong.

- The model tends to be complicated, and easy to overfit.

-

Large K

- Underfit

Usually we start with smaller k, and tune the parameter.

Classification Rule

Majority rule/The wisdom of crowds, and use 0-1 loss.

Implement: kd tree

TODO

Decision Tree

It could be seen as a set of if-else rules.

Since find the optimal decision tree is a np-complete problem, we use heuristic method for finding the sub-optimal dt.

Overall the steps are:

- Feature selection: Find the optimal feature, split the data by this feature.

- Build the decision tree: Repeat step1 until all the nodes are classified or we don’t have features.

- Trim: Trim the decision tree because by step2 we might overfit.

Feature Selection

Here are some common criteria for evaluating the feature importance.

Information Gain (aka MI)

Information gain is based on entropy. Since entropy is the measure of the amount of information that data carries, information gain is the difference between two amounts of information. One is before using the current feature to split the data, one is after using the current feature.

Here is the entropy of the dataset.

Information Gain Ratio

When using information gain to choose the feature, we would naturally choose the feature with more values. So we introduce information gain ratio to solve this problem.

Where is the entropy of feature in dataset . It’s similar to the way we calculate the entropy of the whole dataset, this time we only use data that has feature .

Where is the number of samples in the dataset.

Build the Tree

ID3 algorithm

Use information gain to choose the feature when spliting data

C4.5 algorithm

Use information gain ratio to choose the feature when spliting data

Tree Trim

TODO

How to apply tree models to classification/regression problems?

TODO

Ensemble Learning

Ensemble methods use multiple algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone.

Bagging/Bootstrap aggregating

What is bootstrap dataset? A bootstrapped set is created by selecting from original training data set with replacement. Thus, a bootstrap set may contain a given example zero, one, or multiple times.

To get a bootstrap dataset, we do a sampling with replacement, so an observation may be selected more than once.

What is aggregation? Inference is done by voting of predictions of ensemble members.

- For classification problems, use the voting results. If 2 labels tied, consider adding confidence.

- For regression problems, use average, eg simple average, or weighted average with weights of base models as the average weights.

Random Forest/RF: an implementation of bagging and decision tree

Use decision tree as the base model, and add a randomness for feature selection, which is choose n features from current m candidates, and select optimal feature based on these n features.

- : traditional decision tree

- : randomly select 1 feature to split the data

- : recommended choice

Overall, random tree has 2 randomness.

- Randomness in sample selection: because of bootstrap sampling, the dataset is sampled randomly.

- Randomness in feature selection: the optimal feature is not chosen from all the features, instead it’s chosen based on randomly selected feature candidates.

Advantage

Disadvantage

Boosting

Core idea: learn a bad base model first, then keep boosting the performance until reaching a perfect standard.

Adaboost/Adaptive Boosting

Steps:

- Calculate error rate : the sum of classifier weights that give wrong predictions.

- Calculate new weight : . Note here is inversely proportional to .

- Improve the boosted classifier:

where is a normalization factor,

Boosting Tree

Core: Use a tree to fit residuals.

The new classifier after each iteration is:

And the loss function is:

If we use MSE as the loss function, here we have:

Where is a constant that denotes the difference between the true value and predicted value by last round classifier, which is the residual , so now we have:

Which means every iteration, we train a tree to fit the residual.

But boosting tree is only valid when using MSE as the loss function, so we have GBDT instead.

GBDT/Gradient Boosting Decision Tree

Use negative gradient to approximate the residual, that is:

For MSE, this is the standard residual, for other loss function, this is an approximate for residuals.

Traditional Implementation

Iterate all the feature candidates, split data based on current feature, then calculate fitted residuals based on all data, . Then use loss function to calculate loss, and choose the feature with minimum loss as the feature to split the data.

So the time complexity of traditional implementation is proportional to the number of feature times the number of samples.

Regularization

Shrinkage/Learning Rate

where

Using small learning rates (such as ) yields dramatic improvements in models’ generalization ability over gradient boosting without shrinking (). However, it comes at the price of increasing computational time both during training and querying: lower learning rate requires more iterations.

Stochastic Gradient Boosting Tree/SGBT

The base model should be trained on bootstraped datasets. Use to denote the size of training set.

- : same as gradient boosting

- : recommended interval

- : most common choice

Number of Observations in Leaves

Limiting the minimum number of observations in trees’ terminal nodes.

Penalize Complexity of Tree

penality on the leaf values.

XGBoost/eXtreme Gradient Boosting

Xgboost is modified based on gbdt, where it added regularization and use a second order taylor series to get a more precise loss function.

Taylor series of on is below:

Assume the size of samples is , then the loss function is:

where are all the trees.

To simplify the process, we only use 1 sample, then use a second taylor series at , we have:

Use this result to , now we have:

Note:

- is the number of leaves at iteration

m - is the weight of leaf

j

Since:

- and are both constants, we could just ignore them

- is the sum of leaf weights at iteration

m, so:

where denotes all the samples that fall in leaf j

Now we have loss function as:

Calculate the derivative of loss function of , then we have:

Let derivative = 0, then we have optimal :

Then the minimum loss (with constants) is:

From the loss function formula we know the loss is related to the structure of the tree ( in the equation). So in order to find the minimum loss, we need to iterate all different structured trees, which is too time-consuming.

Consider a heuristic method, start with a single node, and then find the next feature to split the data. The criteria for selecting feature is, after spliting by this feature, the loss would decrease.

Loss reduction:

Tree generation based on pre-sorted features

If loss reduction > 0, then this feature should split the data, else we should prune. So everytime we find the maximum loss reduction to split the data.

To speed up the spliting, we calculate the feature reduction first:

and then sort them from largest to smallest.

Pros: fast speed, could run in parallel.

Cons:

- Need to iterate all the data to calculate loss reduction

- Need to store the sorted results

Note: Even xgb could run in parallel, it’s parallel in feature level, not in data level.

LightGBM

TODO